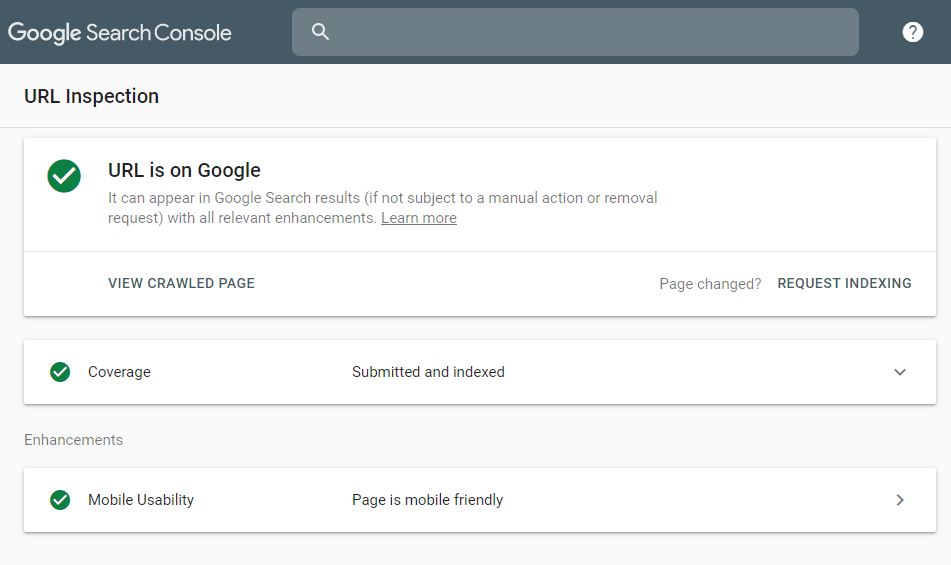

The URL Inspection Tool is one of the most useful reports in the newest version of Google Search Console. There is so much great information to unpack that can help you debug your presence in Google Search. But at a fundamental level, it answers two important questions:

- Has Google found a specific URL from my site?

- Is that URL indexed?

Although I don’t use the URL Inspection tool every day, it definitely gives me a peek into how Google sees a small section of my sites. However, I didn’t build this script to help me out with my workload. I’ve built it because I wanted to improve another open source project from a person I respect deeply, but when he launched it I didn’t have the coding abilities to do so. That person is the great Hamlet Batista who unfortunately passed away only a few days ago (January 2021).

I am not going to lie, this is mostly an exercise for me to channel my grief. To express my appreciation for the teacher and the man that Hamlet was, and of course, to share with the SEO Community. Because I think that’s what Hamlet wanted from us. To share and improve together.

I’ve divided this post into four parts so it’s your choice to read it all or jump to the specific part you’re interested in:

- Why have I built this script

- How does the script work

- How to install and run the script

- Final thoughts

Why have I built this script

Back in April 2019, Hamlet Batista wrote an article in SEJ called “How to Automate the URL Inspection Tool with Python & JavaScript”. I was fascinated by the tool, as I didn’t know about any solutions that could extract this data programmatically. But mostly I was impressed by how easily Hamlet explained the way he created the script.

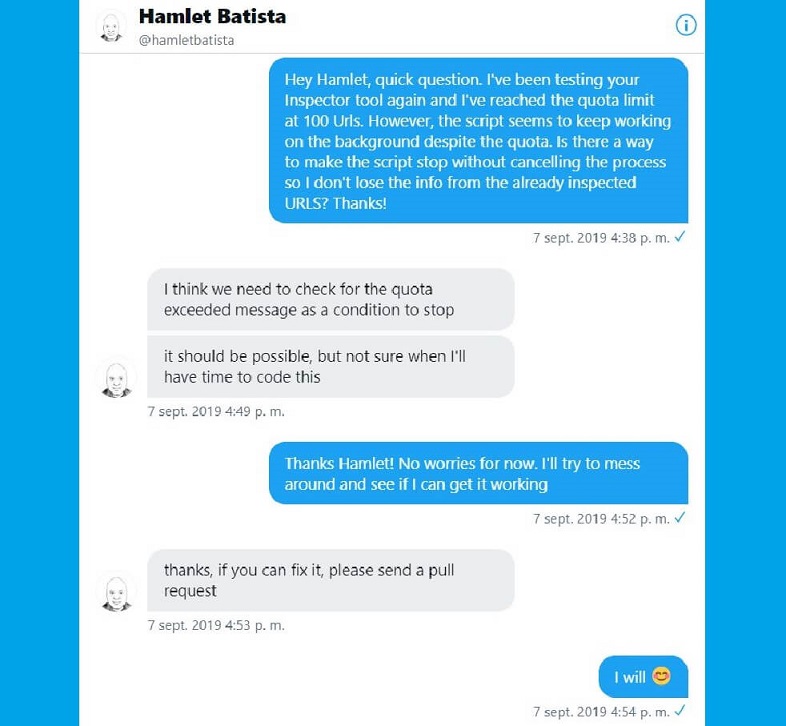

After a few tests, I found a couple of potential bugs and I wrote to Hamlet to see if he encountered the same issues. Even though we didn’t know each other before and I am a nobody in the SEO community, he was kind enough to reply:

Back then, I started to learn some JavaScript, and had zero experience with Python. After trying for a while, I gave up trying to modify his script in Python because I didn’t know what I was doing. But because he was using Puppeteer, a JavaScript library, I figured I could replicate his script using JavaScript.

This seemingly arbitrary challenge made me focus all my energy on learning JavaScript so one day I could improve the script he built and add my small grain of sand to his contribution.

Fast-forward to December 2020: Hamlet and I had a Zoom call to talk about collaborating on his #RSTwittorial series. I had a few ideas that I wanted to share with him, including the JS version of his script, which I had built a long time ago but didn’t think was good enough to share.

When I showed it to him, he was so happy that I had tried to do it on my own. Although we agreed on using another script for the future #RSTwittorial webinar, he encouraged me to share it with the SEO community. So we agreed that I would share it at the same time as the webinar (April 2021). That way I could share two of my scripts and hopefully fix a few of the features I had in my backlog for the JS version of his script.

Hamlet was that kind of man: he encouraged you to believe in yourself.

My experience wasn’t unique by any means. He shared the work of so many people in his articles. He always commented on the work people shared on Twitter. Even after he passed, I could see it in all the messages people wrote about him on Twitter and in the wonderful article Lily Ray wrote on SEJ, and later I heard similar testimonials in the memorial Lily put together to honour him.

I think it’s no secret that he was a big believer in making our industry smarter by showing other people what could be done with programming, especially using his favourite language, Python, and in motivating others to do bigger and better things. Maura Loew, who runs Operations at RankSense and worked really closely with him, said in the memorial that 5 years ago, Hamlet told her that he wanted to start a movement in the SEO industry. And he sure did!

I want to be part of that movement. One where we are kinder and elevate each other. Where we aren’t afraid to learn publicly and share our experience building scripts or simply just doing SEO better so we can collectively get smarter.

With all that said, here is what I promised. I hope you like it!

How does the script work

Although Puppeteer was my first choice, I wanted to try something new that could do the job but allowed a few more configuration options. Meet Playwright, a headless browser library for Node.js built by a few former members of the Puppeteer team who now work at Microsoft.

It works similarly to Puppeteer but one of the key differences is that it allows you to use different browsers, like Firefox.
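To make that difference concrete, here is a minimal sketch of picking a browser engine with Playwright. It is not the code from my repository: the `BROWSER` environment variable and the `pickEngine` and `openPage` helpers are names I made up for illustration, and it assumes the `playwright` package is installed.

```javascript
// Sketch only: BROWSER, pickEngine and openPage are hypothetical names.
// Playwright exposes one launcher per engine: chromium, firefox and webkit.
const SUPPORTED_ENGINES = ['chromium', 'firefox', 'webkit'];

// Normalise the requested engine name and reject anything unsupported.
function pickEngine(name = 'chromium') {
  const engine = String(name).toLowerCase();
  if (!SUPPORTED_ENGINES.includes(engine)) {
    throw new Error(`Unsupported browser engine: ${name}`);
  }
  return engine;
}

// Launch the chosen engine and open a page on the given URL.
async function openPage(url, engineName = process.env.BROWSER) {
  // Required lazily so pickEngine can be used without Playwright installed.
  const playwright = require('playwright');
  const browser = await playwright[pickEngine(engineName)].launch();
  const page = await browser.newPage();
  await page.goto(url);
  return { browser, page };
}
```

With something like this, running `BROWSER=firefox node script.js` would drive Firefox instead of Chromium without touching the rest of the code.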

The mechanism of the script is quite simple. There are two files that you need for the script to work:

- A “urls.csv” file containing a list of URLs from a site that is verified in your Search Console account.

- A “credentials.js” file, updated with the email address you use for Search Console, the password, and the URL of the property you’d like to check.

Create a “urls.csv” file

You can create this directly in your IDE of choice or simply create it in Excel and save it in the same folder as the script. Add the URLs that you would like to check to the file and make sure that they all belong to the same GSC property.
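If you are curious how a file like this could be read in Node.js, here is a small sketch. It assumes a one-column CSV (with or without a header row) and a URL-prefix property. The function names are hypothetical and the real script may parse the file differently.

```javascript
const fs = require('fs');

// Turn the raw text of a one-column CSV into a clean list of URLs.
// Header rows, blank lines and anything that is not an http(s) URL are dropped.
function parseUrlList(csvText) {
  return csvText
    .split(/\r?\n/)
    .map((line) => line.trim().replace(/^"|"$/g, ''))
    .filter((line) => /^https?:\/\//i.test(line));
}

// Keep only the URLs that sit under a URL-prefix property.
// (Assumption: Domain properties would need a different check.)
function filterToProperty(urls, property) {
  return urls.filter((url) => url.startsWith(property));
}

// Read the file from disk; 'urls.csv' is the file name used in this post.
function readUrls(filePath = 'urls.csv') {
  return parseUrlList(fs.readFileSync(filePath, 'utf8'));
}
```

Filtering by property up front is a cheap way to catch a stray URL before the browser ever opens.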

Update the credentials.js file

For the script to work, you need to add your Google Search Console credentials. Edit the variables with your email, password and URL/property that you would like to check and you are ready to go.
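As a rough idea of the shape such a file can take, here is a sketch. The variable names (`email`, `pass`, `site`) and the `missingCredentials` helper are my assumptions for this example, so check the file in the repository for the actual names before editing it.

```javascript
// credentials.js (hypothetical shape, placeholder values)
const credentials = {
  email: 'you@example.com',         // Google account used for Search Console
  pass: 'your-password',            // keep this file out of version control
  site: 'https://www.example.com/', // the GSC property to inspect
};

// Return the names of any fields that are empty or missing,
// so the script can fail early with a useful message.
function missingCredentials(creds) {
  return ['email', 'pass', 'site'].filter(
    (key) => !creds[key] || !String(creds[key]).trim()
  );
}

module.exports = { credentials, missingCredentials };
```

Because the file holds a real password, it is worth adding it to `.gitignore` if you fork the project.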

How to install and run the script

First, make sure that you have Node.js on your machine. At the time of writing this post I’m using version 14.15.4 but it should work with most versions.

Download the script using git, GitHub’s CLI or simply by downloading the code from GitHub directly.

# Git

git clone https://github.com/jlhernando/url-inspector-automator-js.git

# GitHub’s CLI

gh repo clone https://github.com/jlhernando/url-inspector-automator-js

Then install the necessary modules to run the script by typing npm install in your terminal:

npm install

Once you have it on your machine, make sure you have followed the steps in the previous section: create the list of URLs you want to check and update the credentials.js file with your Search Console credentials. After that, use your terminal and type npm start to run the script.

npm start

By default, the script runs in headless mode, but if you’d like to see the script in action like in the example above, simply change the launch option to headless: false.
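For reference, this is roughly what that toggle looks like with Playwright. `headless` and `slowMo` are real Playwright launch options, but the `HEADFUL` environment variable and the helper names are hypothetical and only exist in this sketch.

```javascript
// Build Playwright launch options from the environment.
// HEADFUL is a made-up variable for this example, not part of the script.
function buildLaunchOptions(env = process.env) {
  const headful = env.HEADFUL === '1';
  return {
    headless: !headful,        // false opens a visible browser window
    slowMo: headful ? 250 : 0, // slow each action down so you can follow along
  };
}

// Launch Chromium with those options (assumes `playwright` is installed).
async function launch() {
  const { chromium } = require('playwright');
  return chromium.launch(buildLaunchOptions());
}
```

Watching the browser is handy when debugging, while headless mode is the better default for unattended runs.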

The output

When the script has finished, you will see two new files in your folder: a “results.csv” file and a “results.json” file. Both files will contain the following information but in different formats for convenience:

- The index state of your URL as reported by GSC (Coverage).

- The description of the index state.

- The last reported date & time Googlebot crawled your URL.

- If the URL was found in your XML sitemap.

- The referring pages Googlebot found linking to your URL.

- The Canonical URL declared by the canonical tag in your page (if any).

- The Canonical URL Google has chosen.

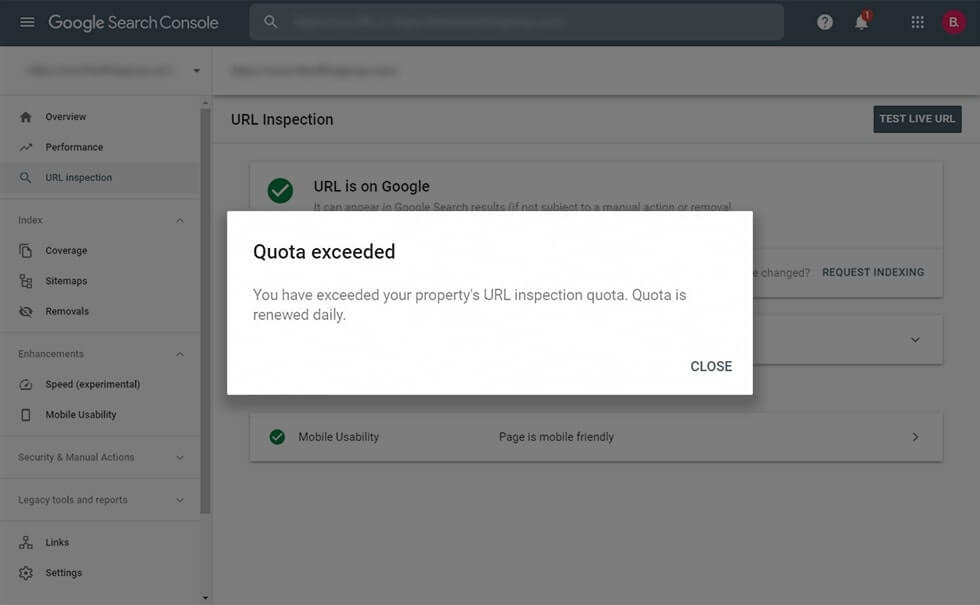

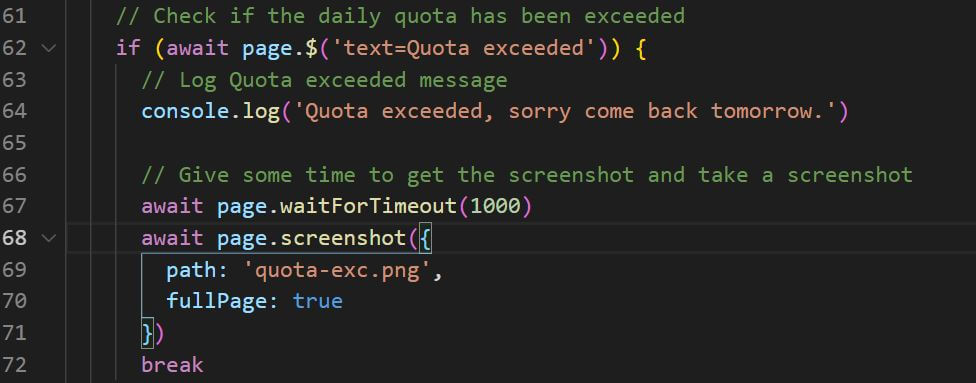
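Writing the same rows to both formats takes very little code. The sketch below shows one way to do it. The column names are hypothetical labels for the fields listed above, not necessarily the headers the script produces.

```javascript
const fs = require('fs');

// Hypothetical column names mirroring the fields described in this section.
const COLUMNS = [
  'url', 'coverage', 'description', 'lastCrawl',
  'sitemap', 'referringPages', 'userCanonical', 'googleCanonical',
];

// Quote a value when it contains a comma, quote or newline.
// Arrays (e.g. several referring pages) are flattened into one cell.
function escapeCsv(value) {
  const raw = value == null ? '' : value;
  const text = Array.isArray(raw) ? raw.join(' | ') : String(raw);
  return /[",\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Build the CSV text: one header line, then one line per inspected URL.
function toCsv(rows) {
  const lines = rows.map((row) =>
    COLUMNS.map((column) => escapeCsv(row[column])).join(',')
  );
  return [COLUMNS.join(','), ...lines].join('\n');
}

// Save the same rows twice: CSV for spreadsheets, JSON for other scripts.
function saveResults(rows, baseName = 'results') {
  fs.writeFileSync(`${baseName}.csv`, toCsv(rows));
  fs.writeFileSync(`${baseName}.json`, JSON.stringify(rows, null, 2));
}
```

The JSON file keeps lists such as referring pages as real arrays, which is the main reason to have it alongside the CSV.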

There is one more thing that you may see if you try to check more than 100 URLs. A screenshot of the “quota exceeded” message from Search Console:

This small condition is exactly what I wanted to change in Hamlet’s code but I couldn’t. I finally understood what was necessary to create it.
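The idea behind that condition can be sketched as a loop that stops as soon as the quota message shows up. This is an illustration of the pattern rather than the code in the repository: `inspectUrl` is a hypothetical async function that inspects one URL in the browser and reports whether the quota message appeared.

```javascript
// `inspectUrl` is a hypothetical async function that resolves to
// { quotaExceeded: boolean, data: object } for a single URL.
async function inspectAll(urls, inspectUrl) {
  const results = [];
  for (let i = 0; i < urls.length; i += 1) {
    const outcome = await inspectUrl(urls[i]);
    if (outcome.quotaExceeded) {
      // Stop here and report which URLs still need checking.
      return { results, remaining: urls.slice(i) };
    }
    results.push({ url: urls[i], ...outcome.data });
  }
  return { results, remaining: [] };
}
```

Returning the unchecked URLs makes it easy to save them and pick up where you left off once the quota resets.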

Final thoughts

I’d like to think that Hamlet would be happy to see how I ended up contributing to his script and shared it with the community. I think I have been holding back on sharing because deep down I believe I can always make things better. And mostly, it is true.

You can always make things better. However, we need to accept that, as individuals, we are limited by time, knowledge, and experience. Sharing can also be scary, as it opens you up to vulnerability and potential ridicule. But I prefer to take a page from Hamlet’s book and start sharing more. Because by sharing, you put extra effort into understanding how things work. You can contribute to other people’s work and motivate them to also build and share new things, including contributing to your own ideas.

I hope you find this script useful, and that you’ll join the movement too. Learn more, build more, share more. #DONTWAIT

If you want to read about everything that Hamlet wrote and created, there is a new platform created by Charly Wargnier called SEO Pythonistas that I’d recommend you check out.

Also, don’t forget to check out his company RankSense, which offers an innovative way to make SEO changes faster using Cloudflare Workers.