In my previous article I talked about how to get indexing information in bulk from Google Search Console using the URL Inspection Tool and Node.js. This tool is great for gathering information about specific URLs on your site. However, Google also provides site owners with a more holistic view of the indexing status of their sites with the Index Coverage Report.

You can check Google’s own documentation and video tutorial to understand the data this report provides in more detail, but at a high level the key data points are:

- The number of pages that Google has indexed.

- The number of pages that Google has found but has not indexed (either because of an error or because they were purposefully excluded).

- How big your site is from Google’s point of view (Valid + Excluded + Errors).

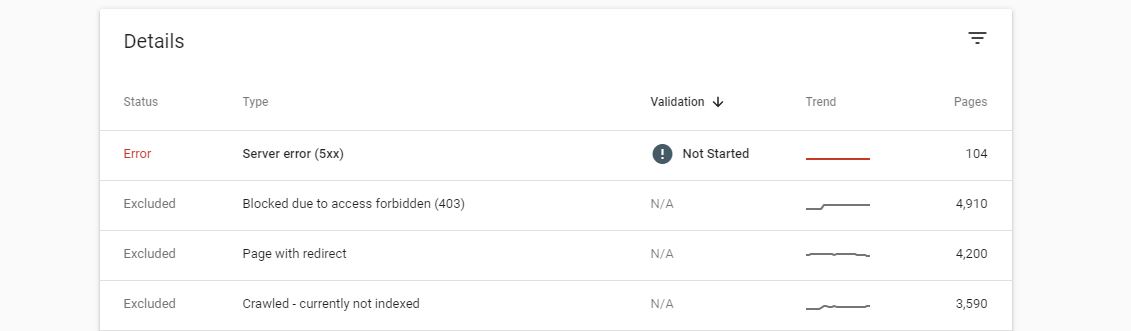

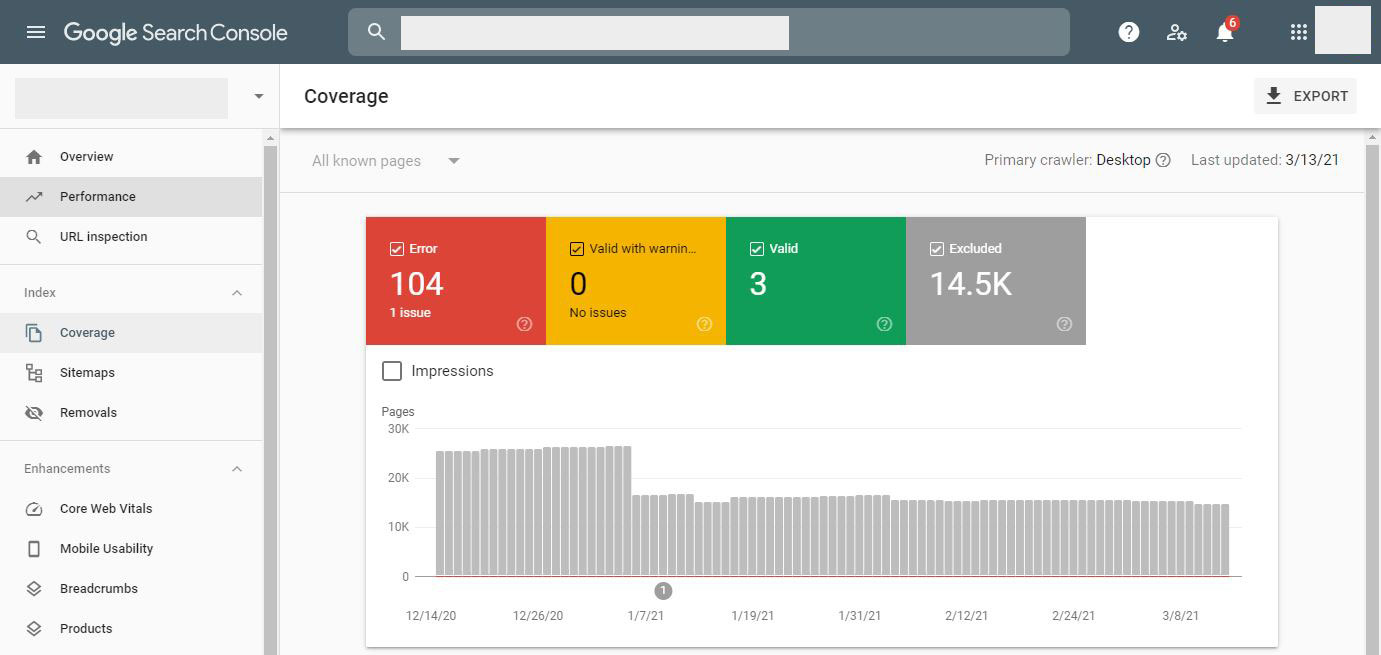

Right now there are four main categories (Errors, Valid with warning, Valid, and Excluded), subdivided into 29 subcategories. Each of these subcategories provides an additional level of classification to help site owners and SEOs understand why their URLs belong in the main category. Not all subcategories will be visible, only the ones that apply to your site.

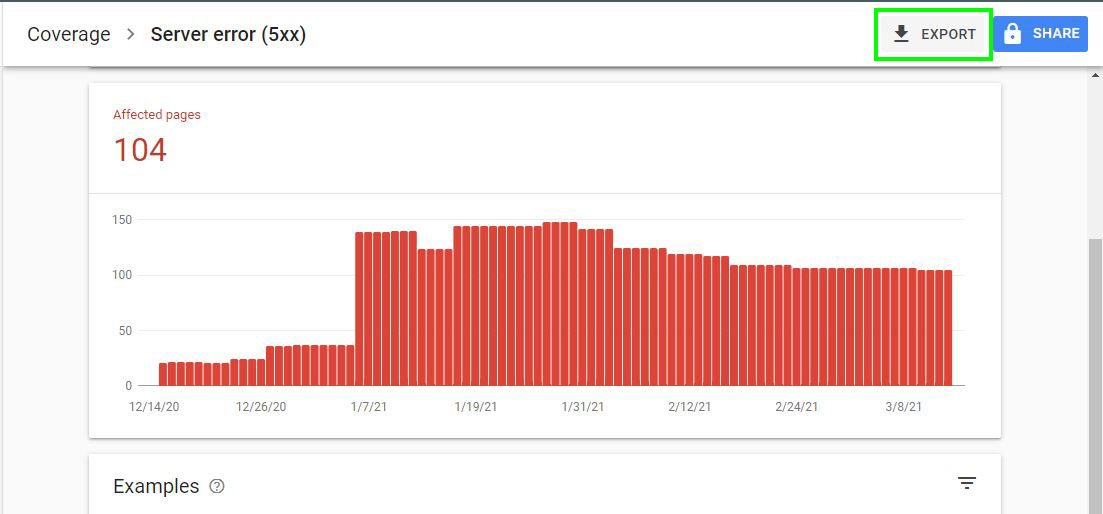

Unfortunately, the export option on the Index Coverage Report view (pictured above) only gives you the top-level numbers per report. If you want to know and export which URLs are inside each report, you have to click on each report and export them one by one.

Extracting the data this way is very manual and time-consuming. Hence, I decided to automate it with Node.js and add a few more features to it.

Installing and running the script

Make sure that you have Node.js installed on your machine. At the time of writing this post I’m using version 14.16.0. The script uses syntax that is only available from version 14 onwards, so double check that you are on that version or above.

# Check Node version

node -v
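If you prefer to check the version from Node.js itself, something like the following would do it. This is only a minimal sketch and not part of the script; `MIN_MAJOR` and `isSupported` are names I made up for the example.

```javascript
// Minimal sketch: warn when the running Node.js is older than version 14.
// MIN_MAJOR and isSupported are hypothetical names, not part of the script.
const MIN_MAJOR = 14;

function isSupported(version, minMajor = MIN_MAJOR) {
  // A version string looks like "14.16.0"; the first segment is the major version.
  const major = Number.parseInt(version.split('.')[0], 10);
  return major >= minMajor;
}

if (!isSupported(process.versions.node)) {
  console.error(`Node.js ${MIN_MAJOR}+ is required, found ${process.versions.node}`);
  process.exitCode = 1;
}
```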

Download the script using git, GitHub’s CLI or simply by downloading the code from GitHub directly.

# Git

git clone https://github.com/jlhernando/index-coverage-extractor.git

# GitHub’s CLI

gh repo clone https://github.com/jlhernando/index-coverage-extractor

Then install the necessary modules to run the script by typing npm install in your terminal.

npm install

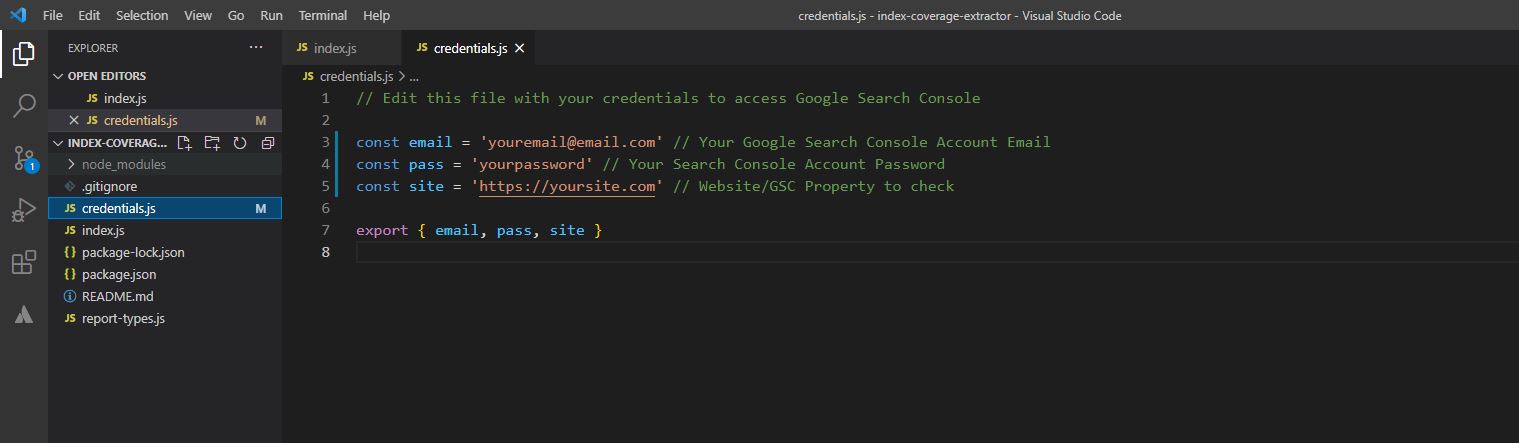

To extract the coverage data from your website/property, update the credential.js file with your Search Console credentials.

After that, type npm start in your terminal to run the script.

npm start

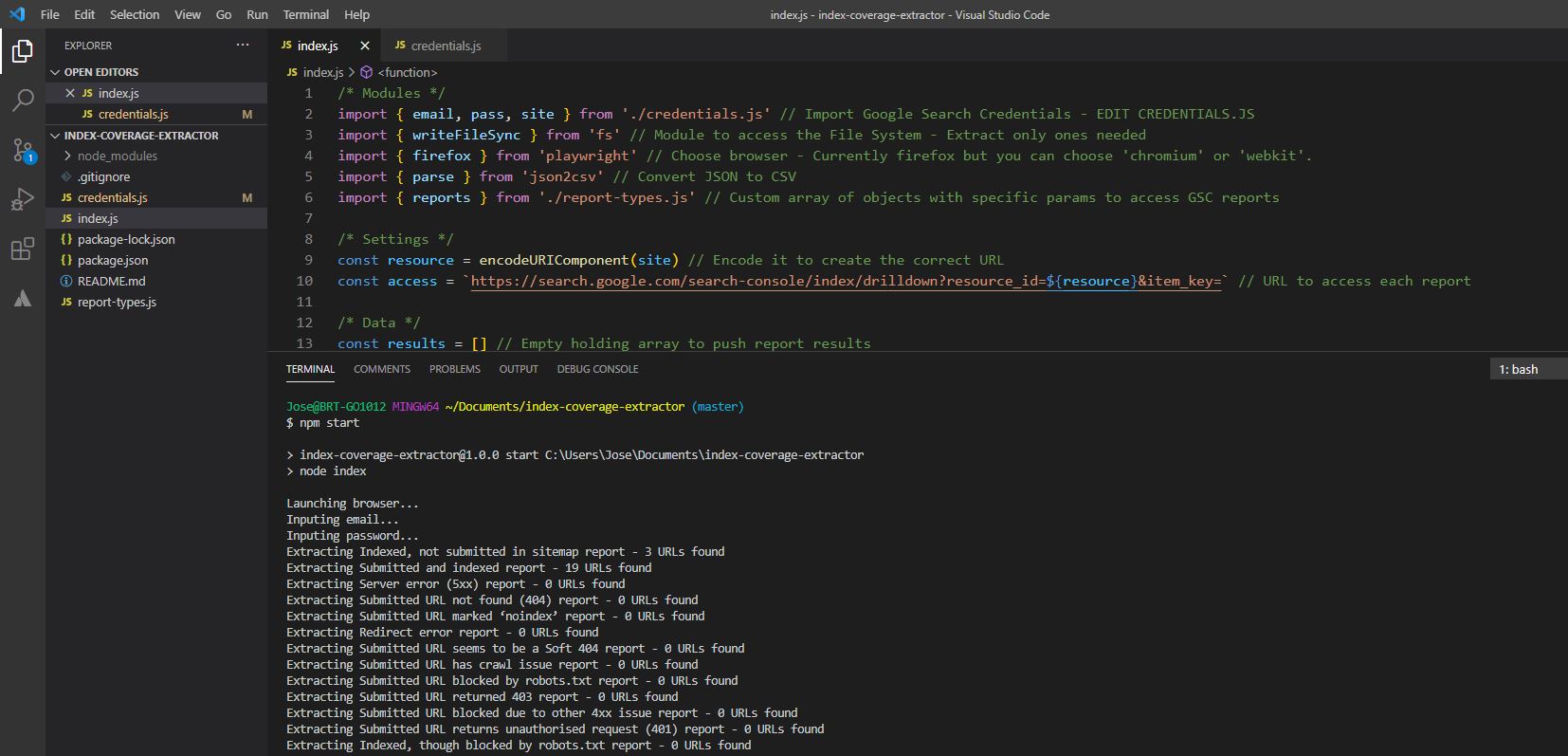

The script logs the processing in the console so you are aware of what is happening.

Like in the URL Inspection Automator, the script uses Playwright and runs in headless mode. If you want to see the browser automation in action, simply change the launch option to headless: false in the index.js file and save it before running the script.
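To illustrate the headless toggle, here is a rough sketch of how the launch option works in Playwright. It is not copied from index.js: `launchOptions` and `openBrowser` are hypothetical helpers, and using the `chromium` browser is an assumption on my part.

```javascript
// Sketch only: how a headless toggle might look with Playwright.
// launchOptions and openBrowser are hypothetical helpers, not taken from index.js.
function launchOptions(showBrowser = false) {
  // headless: true hides the browser window; headless: false shows it.
  return { headless: !showBrowser };
}

async function openBrowser(showBrowser = false) {
  // Loaded lazily so launchOptions can be used even when Playwright is not installed.
  // Assumption: the chromium browser type is used.
  const { chromium } = require('playwright');
  return chromium.launch(launchOptions(showBrowser));
}
```

Calling `openBrowser(true)` would open a visible browser window, which is handy for debugging a step that fails silently in headless mode.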

The output

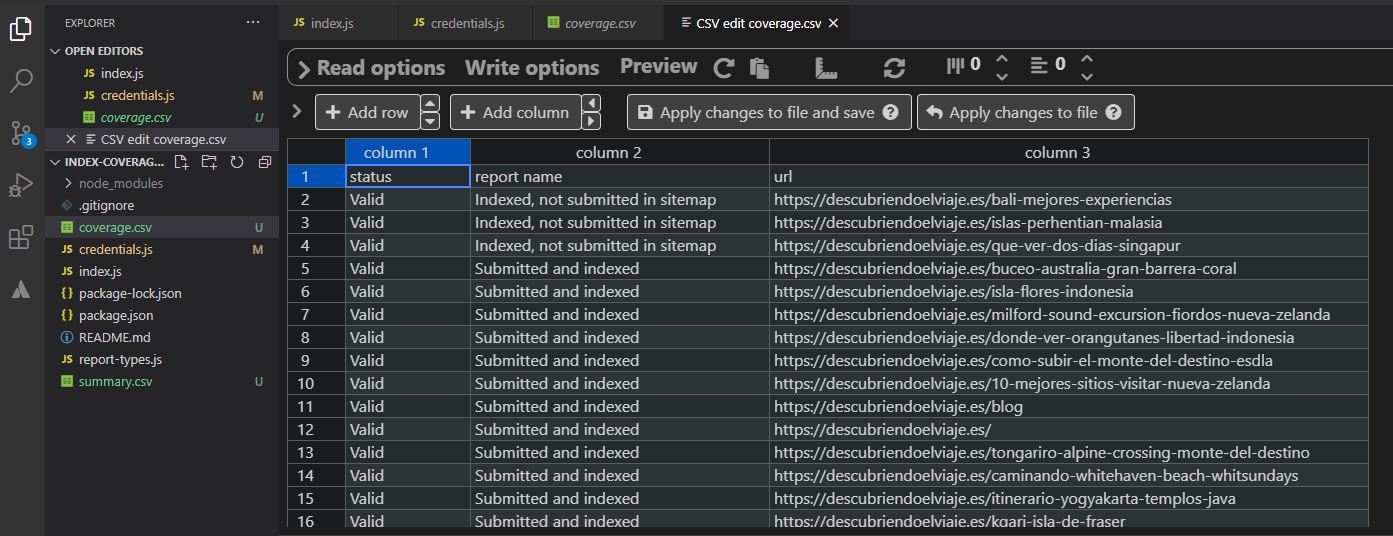

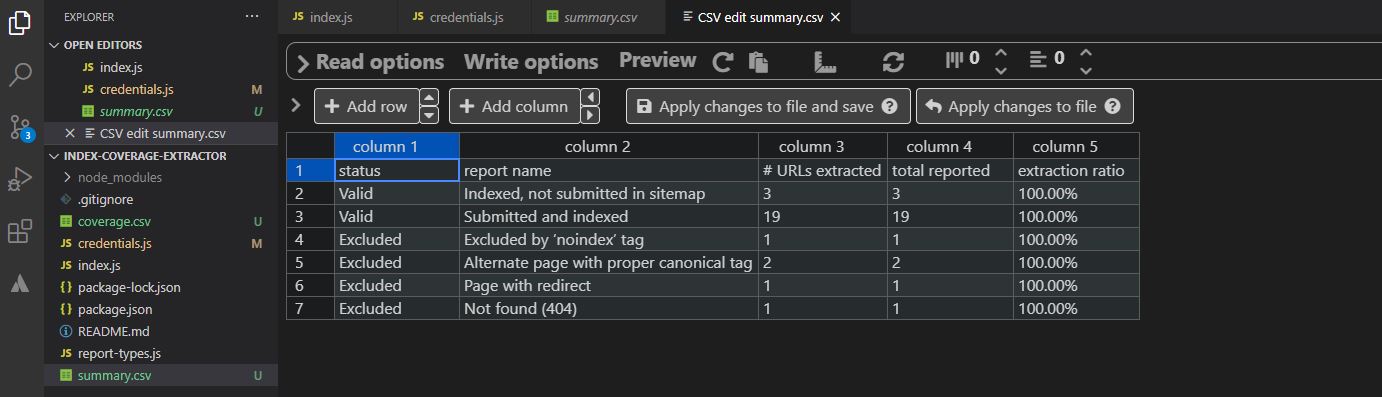

The script will create a "coverage.csv" file and a "summary.csv" file.

The "coverage.csv" will contain all the URLs that have been extracted from each individual coverage report.

The "summary.csv" will contain the number of URLs extracted per report, the total number that GSC reports in the user interface (either the same or higher) and an "extraction ratio", which is the number of URLs extracted divided by the total number of URLs reported by GSC.

I believe this extra data point is useful because GSC has an export limit of 1000 rows per report. Hence, the "extraction ratio" tells you how many URLs you have been able to extract versus how many you are missing from that report.
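As a worked example of the ratio, here is a small sketch with made-up numbers. The report names and totals are hypothetical, and `extractionRatio` is not the script's actual function. Treating an empty report as fully extracted is my own choice for the example.

```javascript
// Hypothetical numbers for illustration; not real Search Console data.
const EXPORT_LIMIT = 1000; // GSC export cap per report, as described above

function extractionRatio(extracted, reportedTotal) {
  // An empty report is treated as fully extracted to avoid dividing by zero.
  if (reportedTotal === 0) return 1;
  return extracted / reportedTotal;
}

const reportedTotals = [
  { report: 'Report A', total: 250 },
  { report: 'Report B', total: 4000 },
];

const summary = reportedTotals.map(({ report, total }) => {
  // At most EXPORT_LIMIT rows can be exported from any single report.
  const extracted = Math.min(total, EXPORT_LIMIT);
  return { report, extracted, total, ratio: extractionRatio(extracted, total) };
});

console.table(summary);
```

Here "Report A" fits under the limit and gets a ratio of 1, while "Report B" gets 1000 / 4000 = 0.25, meaning three quarters of its URLs are missing from the export.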

Future updates

There are a few features that I think would be really nice to have in future releases. For example, extracting the "update" date and turning the script into a Google Cloud Function that stores the data in BigQuery only if the date is different to the previous date stored.
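The "only store when the date changes" check could be as simple as the sketch below. Nothing here exists in the script yet: `shouldStore` is a hypothetical helper, and I'm assuming the dates are available as plain strings such as "2021-05-01".

```javascript
// Sketch: decide whether a new extraction is worth storing.
// shouldStore is a hypothetical helper; dates are assumed to be strings like "2021-05-01".
function shouldStore(latestUpdate, previousUpdate) {
  // Store when nothing has been stored yet or when the report's update date changed.
  return previousUpdate == null || latestUpdate !== previousUpdate;
}
```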

I know other SEOs are already doing this kind of extraction using Google Sheets, which is very cool, so I might give that a try. In the meantime, I hope that you find this script useful, and if you have any thoughts, hit me up on Twitter.