If you have read my blog before, you might have seen that I'm slightly obsessed with getting index status data from Google...

Until now, if you wanted to get indexing information from Google Search Console, you had to automate the URL Inspection tool or extract it in bulk from the Index Coverage Report using a headless browser module like Playwright or Puppeteer.

However, today our prayers have been answered!

The Google Search Console team has released an official endpoint to use the URL Inspection Tool from their API!

In this blog post, I'll show you how to extract index status data in bulk through this new endpoint using my newly published Node.js script.
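If you are curious what a single call to the new endpoint looks like, here is a minimal sketch that only builds the request. The endpoint URL and the `inspectionUrl` / `siteUrl` body fields come from Google's URL Inspection API reference. The function name `buildInspectRequest` and the `accessToken` parameter are my own placeholders for illustration, and this is not necessarily how the script does it internally.

```javascript
// Endpoint as documented in Google's URL Inspection API reference.
const INSPECT_ENDPOINT =
  'https://searchconsole.googleapis.com/v1/urlInspection/index:inspect';

// Build a fetch-style request for one URL.
// accessToken is a hypothetical OAuth 2.0 access token with a Search Console
// scope, obtained elsewhere; this sketch does not perform authentication.
function buildInspectRequest(inspectionUrl, siteUrl, accessToken) {
  return {
    url: INSPECT_ENDPOINT,
    options: {
      method: 'POST',
      headers: {
        Authorization: `Bearer ${accessToken}`,
        'Content-Type': 'application/json',
      },
      // inspectionUrl is the page to check, siteUrl is the GSC property.
      body: JSON.stringify({ inspectionUrl, siteUrl }),
    },
  };
}
```

You could pass `url` and `options` to any HTTP client that follows the `fetch(url, options)` convention. The index data sits under `inspectionResult.indexStatusResult` in the JSON response.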

Before you run the script

Make sure that you have Node.js installed on your machine. At the time of writing this post, I’m using version 16.4.2.

In this script I'm using syntax that is only available from version 14 onwards, so double-check that you are on version 14 or above.

# Check Node version

node -v
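If you would rather have this check fail loudly from code, here is a small sketch of the same test in JavaScript. The helper names `majorVersion` and `isSupported` are hypothetical, and the minimum of 14 is simply the version mentioned above.

```javascript
// Minimum major version, taken from the requirement stated in this post.
const MIN_MAJOR = 14;

// Extract the major version from a string such as "16.4.2" or "v16.4.2".
function majorVersion(version) {
  return Number.parseInt(version.replace(/^v/, '').split('.')[0], 10);
}

// True when the given version string meets the minimum major version.
function isSupported(version, minMajor = MIN_MAJOR) {
  return majorVersion(version) >= minMajor;
}

// process.versions.node is the running Node.js version without the "v" prefix.
if (!isSupported(process.versions.node)) {
  console.error(
    `Node.js ${process.versions.node} is too old; version ${MIN_MAJOR} or later is needed.`
  );
  process.exitCode = 1;
}
```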

Download the script using git, GitHub’s CLI, or by downloading the code from GitHub directly.

# Git

git clone https://github.com/jlhernando/google-index-inspect-api.git

# GitHub CLI

gh repo clone https://github.com/jlhernando/google-index-inspect-api

Then install the necessary modules by running npm install in your terminal:

npm install

Update the urls.csv file with the individual URLs that you would like to check in the first column and the GSC property each URL belongs to in the second column. Keep the headers.

url,property

https://jlhernando.com/blog/how-to-install-node-for-seo/,https://jlhernando.com/

https://jlhernando.com/blog/index-coverage-extractor/,https://jlhernando.com/

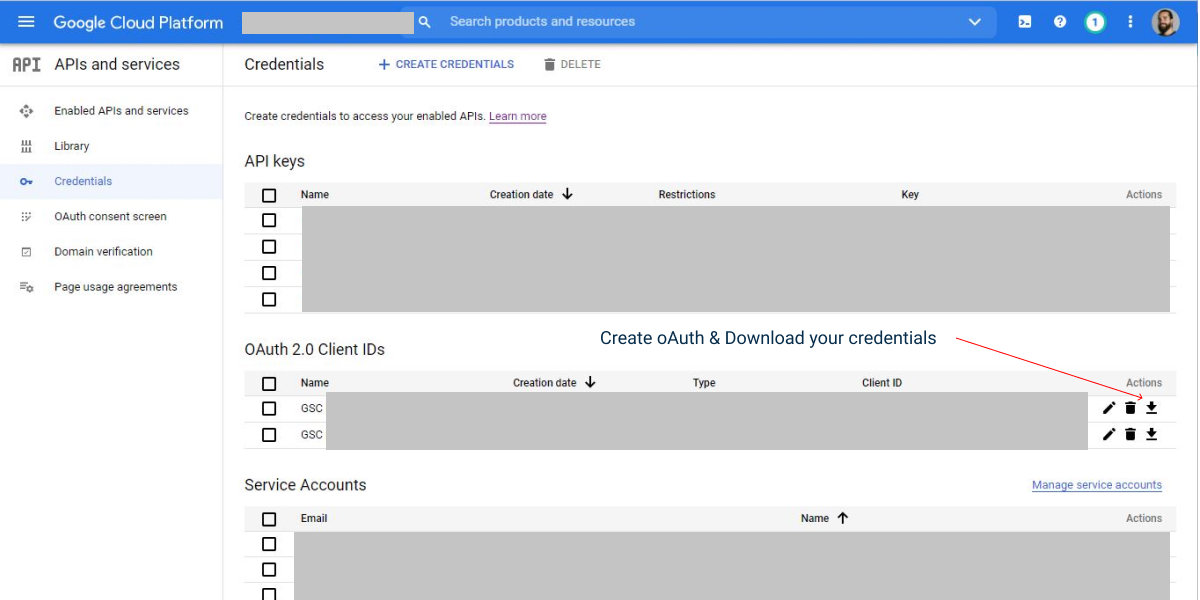
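To make the expected layout concrete, here is a minimal sketch that parses this two-column format and groups the URLs by property. It assumes plain values with no quoted fields or embedded commas, and the function names `parseUrlsCsv` and `groupByProperty` are hypothetical helpers of mine, not part of the script.

```javascript
// Parse the url,property layout into an array of { url, property } rows.
// Assumption: no quoted fields or embedded commas (use a CSV library otherwise).
function parseUrlsCsv(text) {
  const lines = text
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter(Boolean); // drop blank lines
  const [header, ...rows] = lines;
  if (header !== 'url,property') {
    throw new Error(`Unexpected header: ${header}`);
  }
  return rows.map((row, i) => {
    const [url, property] = row.split(',').map((value) => value.trim());
    if (!url || !property) {
      throw new Error(`Data row ${i + 1} is missing a column`);
    }
    return { url, property };
  });
}

// Group the URLs under the property they belong to.
function groupByProperty(rows) {
  const groups = new Map();
  for (const { url, property } of rows) {
    if (!groups.has(property)) groups.set(property, []);
    groups.get(property).push(url);
  }
  return groups;
}

const sample = `url,property
https://jlhernando.com/blog/how-to-install-node-for-seo/,https://jlhernando.com/
https://jlhernando.com/blog/index-coverage-extractor/,https://jlhernando.com/`;

const groups = groupByProperty(parseUrlsCsv(sample));
console.log(groups.get('https://jlhernando.com/').length); // 2
```

A missing header or an empty second column throws early, which is usually easier to debug than a failed API call later on.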

Update the credentials-secret.json file with your own OAuth 2.0 Client ID credentials from your Google Cloud Platform account.
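A quick sanity check on that file can save you a confusing authentication failure. The sketch below assumes the file is the unmodified JSON downloaded from the Google Cloud Credentials page, which normally wraps the client under a top-level `installed` (desktop app) or `web` (web application) key. I have not confirmed that the script requires exactly this shape, and `findOAuthClient` is a hypothetical helper.

```javascript
// Return the OAuth client object from a parsed credentials file.
// Assumption: the standard Google Cloud download format, with the client
// nested under "installed" (desktop app) or "web" (web application).
function findOAuthClient(credentials) {
  const client = credentials.installed || credentials.web;
  if (!client) {
    throw new Error('Expected a top-level "installed" or "web" key');
  }
  for (const field of ['client_id', 'client_secret']) {
    if (typeof client[field] !== 'string' || client[field] === '') {
      throw new Error(`Missing "${field}" in OAuth client credentials`);
    }
  }
  return client;
}
```

To use it, read credentials-secret.json with `fs.readFileSync`, pass the text through `JSON.parse`, and hand the result to `findOAuthClient` before starting the extraction.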

Running the script from your terminal and expected output

To run the script from your terminal simply use the following command:

npm start

Progress messages

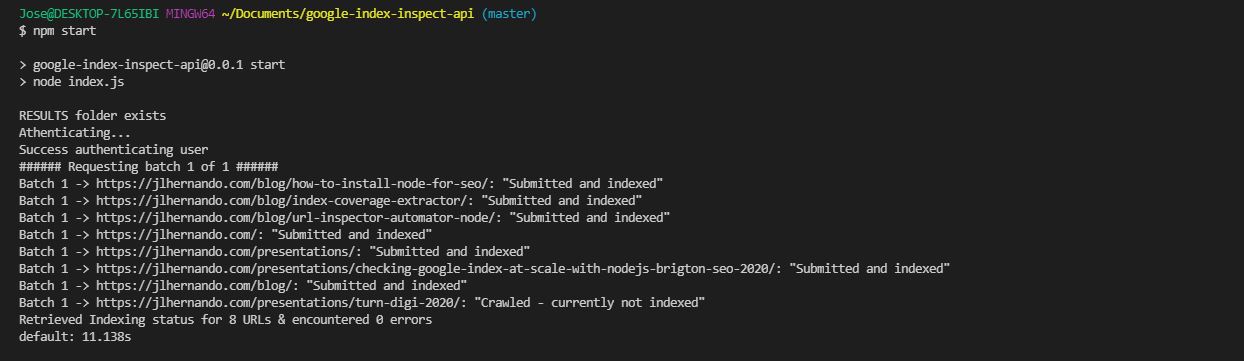

If the script is able to authenticate you for the URLs and properties you are trying to check, you will see a series of progress messages:

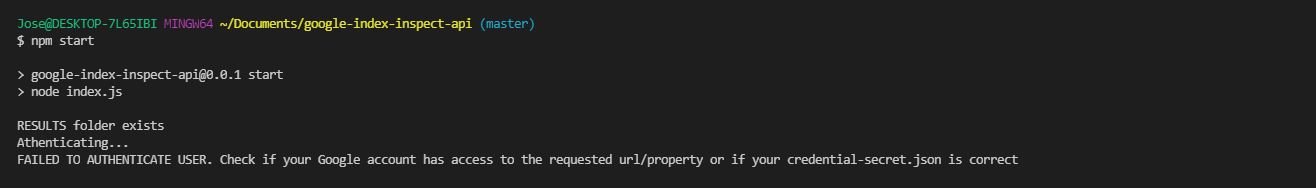

On the other hand, if your credentials don't match the set of URLs and properties you are trying to extract, you will receive a failure message and the script will stop.

Expected output

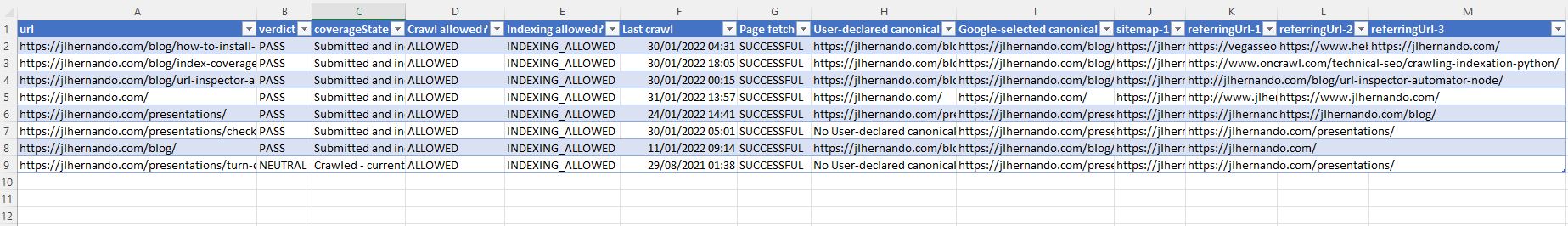

If the script has been successful in retrieving index status data, you will have a credentials.csv file and a credentials.json file under the RESULTS folder (unless you have changed the name in the script).

If there are any extraction errors, these will be in another file named errors.json.
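The split between results and errors can be sketched roughly as follows. This is an illustration under my own assumptions, not the script's actual code: `splitOutcomes` is a hypothetical helper that takes outcomes in the shape `Promise.allSettled` produces, and it keeps only two of the fields found under `inspectionResult.indexStatusResult` in the API response.

```javascript
// Separate settled inspection outcomes into successful rows and errors.
// urls[i] is the URL that produced settled[i].
function splitOutcomes(urls, settled) {
  const results = [];
  const errors = [];
  settled.forEach((outcome, i) => {
    if (outcome.status === 'fulfilled') {
      // Optional chaining guards against a response without index data.
      const status = outcome.value?.inspectionResult?.indexStatusResult ?? {};
      results.push({
        url: urls[i],
        verdict: status.verdict,
        coverageState: status.coverageState,
      });
    } else {
      errors.push({
        url: urls[i],
        message: String(outcome.reason?.message ?? outcome.reason),
      });
    }
  });
  return { results, errors };
}

// Hand-written outcomes standing in for real API responses.
const { results, errors } = splitOutcomes(
  ['https://example.com/a', 'https://example.com/b'],
  [
    {
      status: 'fulfilled',
      value: {
        inspectionResult: {
          indexStatusResult: { verdict: 'PASS', coverageState: 'Submitted and indexed' },
        },
      },
    },
    { status: 'rejected', reason: new Error('403 Forbidden') },
  ]
);
console.log(results.length, errors.length); // 1 1
```

Using `Promise.allSettled` rather than `Promise.all` for the real calls means one failing URL does not discard the data already collected for the others.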

This is very much an MVP and there are many optimisations that could be done, but it does the job. Remember that the URL Inspection API has a limit of 2,000 calls per day and 600 calls per minute for each property, so keep your urls.csv file to a maximum of 2,000 URLs per property.
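One of those optimisations would be to plan the calls around the quota up front. Here is a minimal sketch that caps a list at the daily limit and splits it into per-minute batches. `planBatches` is a hypothetical helper and the script does not necessarily work this way.

```javascript
// Limits as quoted in this post.
const DAILY_LIMIT = 2000;
const PER_MINUTE_LIMIT = 600;

// Cap the list at the daily limit and split it into per-minute batches.
// URLs beyond the daily limit are returned separately so they are not lost.
function planBatches(urls, perMinute = PER_MINUTE_LIMIT, perDay = DAILY_LIMIT) {
  const allowed = urls.slice(0, perDay);
  const skipped = urls.slice(perDay);
  const batches = [];
  for (let i = 0; i < allowed.length; i += perMinute) {
    batches.push(allowed.slice(i, i + perMinute));
  }
  return { batches, skipped };
}
```

With 2,100 URLs this yields batches of 600, 600, 600 and 200, plus 100 skipped URLs to run the next day. Waiting at least a minute between batches keeps each one inside the per-minute limit.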

I hope that you find this script useful. If you have any thoughts, hit me up on Twitter @jlhernando.